Claude 3.5 Sonnet, Imbue 70B, and models of remembering and forgetting

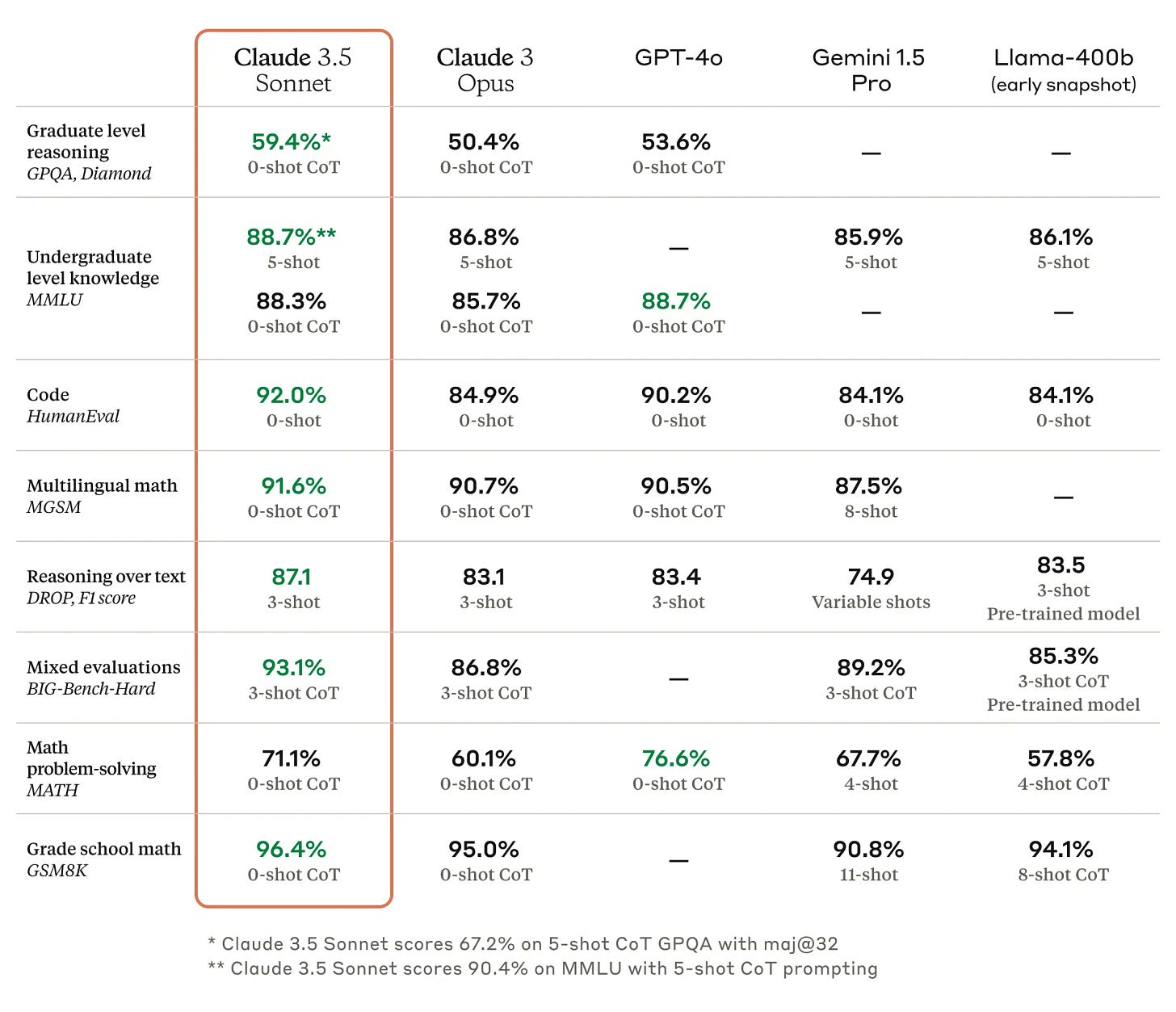

Last week Anthropic launched Claude 3.5 Sonnet, with particularly notable improvements in graduate-level reasoning, code, and mixed evaluations (BIG-Bench Hard, which, contrary to popular belief, is not what I say when watching guys bench press at the gym, but rather a set of 23 particularly challenging tasks from BIG-Bench, the Beyond the Imitation Game Benchmark).

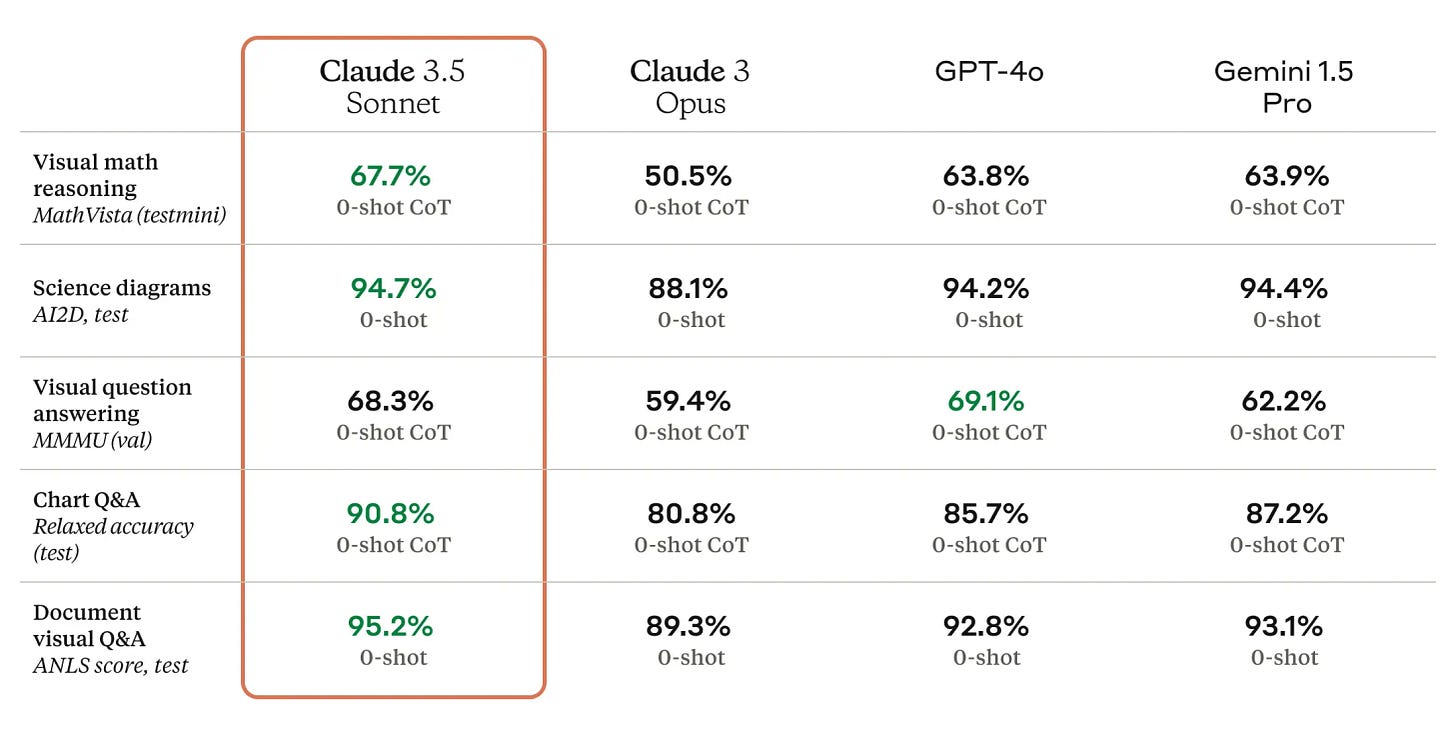

Claude 3.5 Sonnet also raises the bar in visual reasoning (visual math, science diagrams), giving some real weight to rumors of a full-fledged Apple partnership, especially in light of iOS 18. I conducted an extensive research audit (read: Google, Twitter and Reddit search) trying to reverse engineer the modeling techniques that drive these improvements in particular, but they seem to be (understandably) proprietary.

Anthropic does reiterate its commitment to safety and privacy, still rating Claude 3.5 Sonnet at ASL-2, the level that covers current LLMs: “systems that show early signs of dangerous capabilities - for example ability to give instructions on how to build bioweapons - but where the information is not yet useful due to insufficient reliability or not providing information that e.g. a search engine couldn’t”. It also takes a Moore’s law-esque stance, aiming to “substantially improve the tradeoff curve between intelligence, speed, and cost every few months”, and mentions “exploring features like Memory, which will enable Claude to remember a user’s preferences and interaction history as specified, making their experience even more personalized and efficient”.

Meanwhile, Imbue has taken an infrastructure-first marketing approach with their 70B parameter model that outperforms “zero-shot GPT-4o on reasoning-related tasks”, replete with stress and networking tests on “4,088 [NVIDIA] H100 GPUs spread across 511 computers, with eight GPUs to a computer” on a “fully non-blocking” InfiniBand network topology: “every GPU could, in theory, simultaneously talk to another GPU at the maximum rate.”

Notably:

It would have been far slower to send data over Ethernet because data would first travel from the GPU to the CPU, and then out one of the 100 Gbps Ethernet cards.

An amusing parallel I like to draw here is that Anthropic is becoming to Imbue what Config was to the DAC Chips to Systems Conference this week in SF: occupying the same noisy, high-volatility but high-growth field, at effectively opposite ends of the target audience spectrum. As with most things, there is value at the intersection, but it’ll take time and patience to let the chips fall where they may.

How memory works

A friend of mine sent me this article recently, which mentions the extremes of the human memory spectrum, HSAM (highly superior autobiographical memory) and SDAM (severely deficient autobiographical memory), and the author’s own experience as a “Forgetter” in a world of both Forgetters and Rememberers:

Although it’s impossible to say if being a Forgetter has informed parts of my personality or just enhanced preexisting qualities, I do think my inability to remember has allowed for easier passage in certain ways… My father, who is a Rememberer, says his nostalgia often borders on unbearable.

One Rememberer tells me she always considered herself to be someone with a good memory, so she worked hard at it and kept a calendar of daily events that she could look back on. “Because people rely on me to know that information,” she says. Forgetter Henry, meanwhile, said that while he once tried harder to remember things, he has pretty much given up.

I am a notorious Forgetter. My friends will often have to remind me of things that we did last week, last year, how we met, and other formative experiences that are truly embarrassing for me to have forgotten given how much I care about the people in my life. After reading this article, though, I started writing out a personal and family history, a way to “practice remembering”, and realized how much of my life I could and did remember, how many long-unspoken memories and experiences (especially from my childhood) shaped me in profound ways and continue to serve as core parts of my personality. Certainly, a lot of these are insecurities that I now have a stronger foundation to work through. But there are also memories I’ve unearthed with this exercise that remind me of how blessed I’ve been, what opportunities I’ve both stumbled into and chased after tirelessly, that have given me a renewed appreciation and gratitude for what the world has given to me and a desire to provide the same to others.

This paper (feat. Mikel Artetxe, Reka AI) made the rounds in semi-sensationalist AI coverage earlier this year by proposing active forgetting (resetting the embedding layer periodically during pretraining), with particular applications to non-English languages:

“Human memory in general is not very good at accurately storing large amounts of detailed information. Instead, humans tend to remember the gist of our experiences, abstracting and extrapolating,” said Benjamin Levy, a neuroscientist at the University of San Francisco. “Enabling AI with more humanlike processes, like adaptive forgetting, is one way to get them to more flexible performance.”
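To make "resetting the embedding layer periodically" a bit more concrete, here is a minimal toy sketch in plain Python. It is not the paper's implementation: the function names, the tiny dot-product objective, and parameters like `reset_every` are all hypothetical stand-ins I made up for illustration. The only idea it tries to capture is that the embeddings get re-initialized every few steps while the rest of the model (the "body") keeps its learned weights.

```python
import random


def init_embeddings(vocab_size, dim, rng):
    """Fresh, small random embeddings (hypothetical init scheme)."""
    return [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(vocab_size)]


def train_with_active_forgetting(num_steps, reset_every, vocab_size=8, dim=4,
                                 lr=0.1, seed=0):
    """Toy training loop: embeddings are reset every `reset_every` steps,
    while the body weights are never reset."""
    rng = random.Random(seed)
    embeddings = init_embeddings(vocab_size, dim, rng)
    # Stand-in for the rest of the network (assumption: a single weight vector).
    body = [rng.gauss(0.0, 0.02) for _ in range(dim)]
    reset_steps = []

    for step in range(1, num_steps + 1):
        # Toy objective: push dot(embedding[token], body) toward 1.0.
        token = rng.randrange(vocab_size)
        emb = embeddings[token]
        err = sum(e * b for e, b in zip(emb, body)) - 1.0
        # Compute both gradients before applying either update.
        grad_emb = [err * b for b in body]
        grad_body = [err * e for e in emb]
        for i in range(dim):
            emb[i] -= lr * grad_emb[i]
            body[i] -= lr * grad_body[i]

        # Active forgetting: wipe the embeddings, keep the body as-is.
        if step % reset_every == 0:
            embeddings = init_embeddings(vocab_size, dim, rng)
            reset_steps.append(step)

    return embeddings, body, reset_steps


if __name__ == "__main__":
    _, _, resets = train_with_active_forgetting(num_steps=10, reset_every=4)
    print("embedding resets happened at steps:", resets)
```

The design choice worth noticing is that only one part of the model forgets. The body has to keep working with embeddings that are repeatedly relearned from scratch, which is the intuition behind why swapping in embeddings for a new language later might be easier.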

Generally, I find neuroplasticity (“the ability of the nervous system to change its activity in response to intrinsic or extrinsic stimuli by reorganizing its structure, functions, or connections”) to be under-studied and under-modeled as plasticity in neural networks. A very simple and reasonable explanation for this is that state-of-the-art ML modeling is, and has always been, driven by performance (e.g. accuracy/precision/recall), and now also by cost and speed, for tangible applications. Quantum computing, which conceptually (as it is much more intrinsically probabilistic, uncertain, and chaotic) may offer a better basis for modeling a true human brain, with customizable remembering/forgetting capabilities, is still at least some years out from a real-world breakthrough.

While I’m specifically not advocating for a future where humans and machines are truly indistinguishable, I wonder if there is under-represented value in building a more accurate machine model of human memory for the sake of understanding the human brain itself. This week we saw huge advances in biological foundation models reflecting decades of truly inspiring work bridging understanding across biologists and computational scientists. Just as I discovered latent connections in my own brain via an active learning exercise and found surprising utility in approaching emotional pathways as a reinforcement learning problem, perhaps the same can be modeled at billion- and trillion-scale to uncover personalized therapeutic pathways for others.